Unit I

Definition

Image processing is a method of performing operations on an image to obtain an enhanced image or to extract useful information from it.

Digital image processing is the manipulation of digital images using a computer.

Fundamental Steps Involved

Image acquisition ⟶ acquiring an image in digital form, e.g. from a camera or from storage.

Image enhancement ⟶ subjective improvement of an image's appearance. Examples include brightness, contrast, color, and saturation adjustment.

Image restoration ⟶ objective restoration of an image using well-defined mathematical and/or probabilistic models of image degradation.

Color image processing ⟶ color modeling and processing in the digital domain.

Wavelets and multi-resolution processing ⟶ foundation for representing images at various degrees of resolution. Images are successively subdivided into smaller regions for data compression and for pyramidal representation.

Compression ⟶ reducing the storage required to save an image or the bandwidth to transmit it.

Morphological processing ⟶ tools for extracting image components that are useful in the representation and description of shape.

Segmentation ⟶ partition an image into its constituent parts or objects. One of the most difficult tasks in DIP.

Representation and description ⟶ transforming raw data into a form suitable for subsequent computer processing. Description deals with extracting attributes that result in some quantitative information of interest or are basic for differentiating one class of objects from another.

Object recognition ⟶ process that assigns a label to an object based on its descriptors. Example: assigning the label "vehicle" to an object.

Knowledge base ⟶ knowledge about the problem domain that guides processing and inference from the processed image. It can range from the simple process of detailing regions of an image where the information of interest is known to be located, to the complex process of generating an interrelated list of all major possible defects in a materials inspection problem.

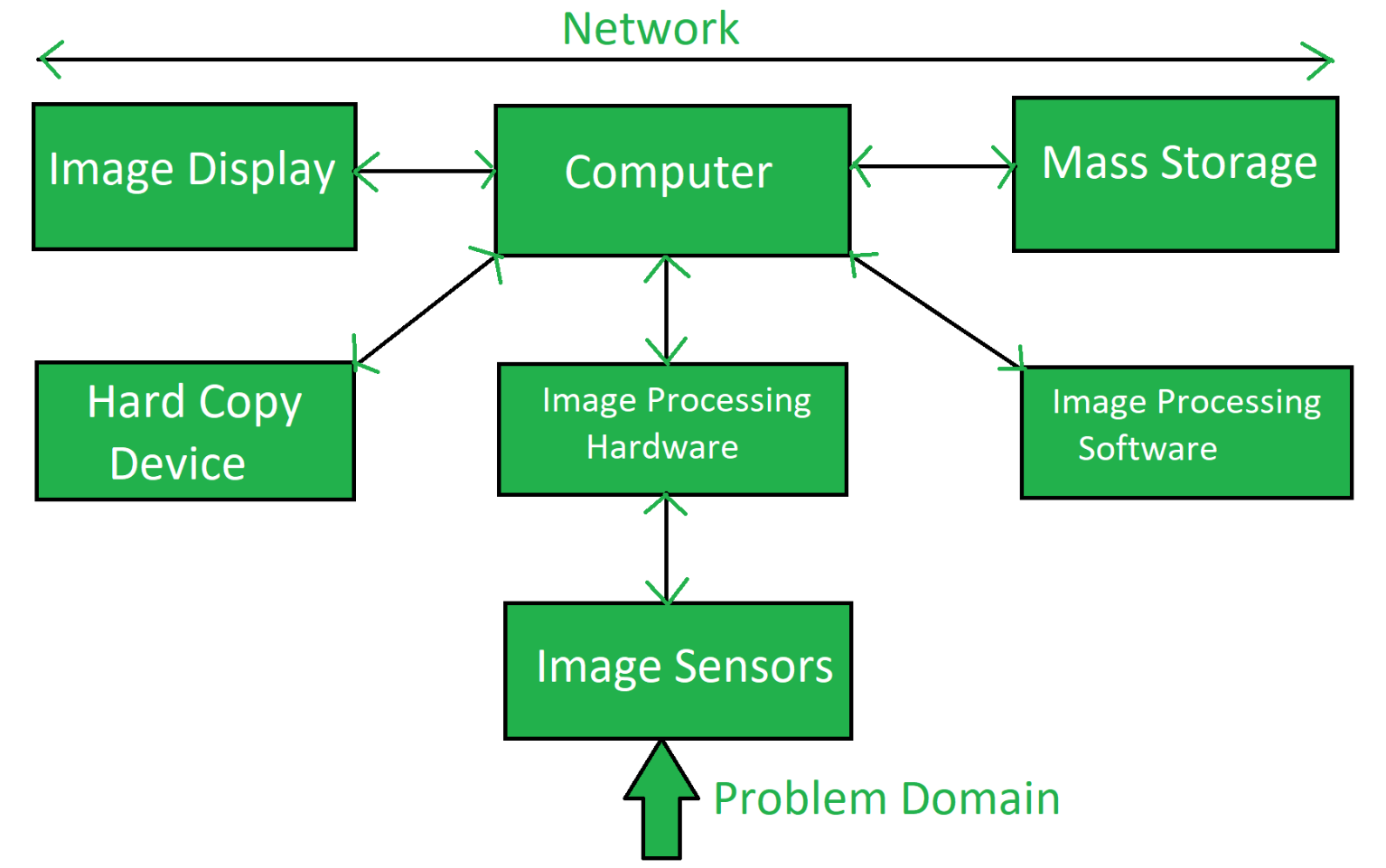

Components of an Image Processing System

Image sensors ⟶ sense the intensity, amplitude, coordinates, and other features of the image and pass the result to the image processing hardware. The sensors are the system's interface to the problem domain.

Image processing hardware ⟶ dedicated hardware that processes the data obtained from the image sensors.

Computer ⟶ general-purpose computer that runs the image processing software.

Image processing software ⟶ software that includes all the mechanisms and algorithms that are used in an image processing system.

Mass storage ⟶ stores the pixels of the images during processing.

Hard copy device ⟶ permanent record of the processed output, e.g. a laser or inkjet printer, a film camera, or an optical disk.

Image display ⟶ the monitor or display screen that displays the processed images.

Network ⟶ connection of all the above elements of the image processing system.

Elements of Visual Perception

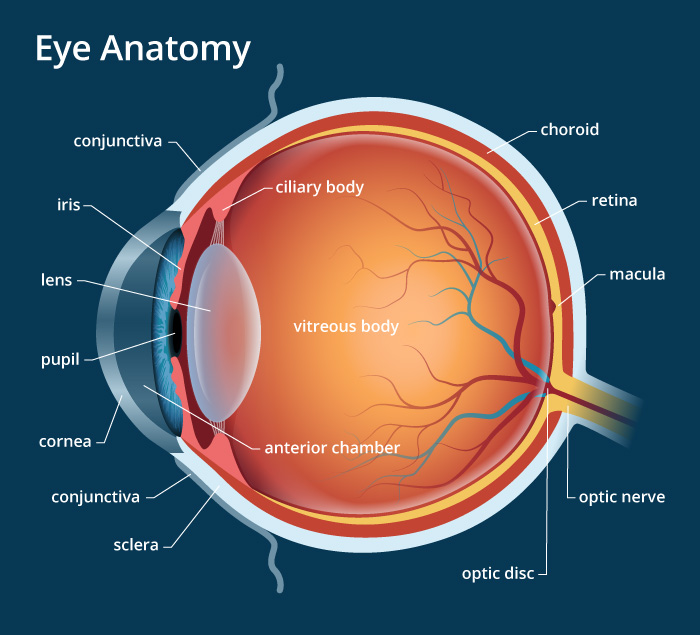

In human visual perception, the eyes act as the sensor or camera, neurons act as the connecting cable and the brain acts as the processor.

Structure of Eye

The human eye is a slightly asymmetrical sphere with an average diameter of about 20 mm to 25 mm. It has a volume of about 6.5 cc.

Light enters the eye through the pupil, a small black-looking aperture that contracts when exposed to bright light, and is focused onto the retina, which acts like the film in a camera.

The lens, iris, and cornea are nourished by a clear fluid, the aqueous humor, which fills the anterior chamber. The balance between aqueous production and absorption controls the pressure within the eye.

The eye contains between 6 and 7 million cones, which are highly sensitive to color. Cones let us perceive color.

The eye contains between 75 and 150 million rods, which are sensitive to low levels of illumination. Rods let us perceive light intensity, i.e. grayscale.

Image Formation

The lens of the eye focuses light onto the photoreceptive cells of the retina, which detect the photons and respond by producing neural impulses.

The distance between the center of the lens and the retina is about 17 mm, and the focal length ranges from approximately 14 mm to 17 mm.
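With the lens-to-retina distance taken as about 17 mm, similar triangles give the size of the retinal image: an object of height H at distance d produces a retinal image of height h with h / 17 = H / d. A minimal Python sketch of that proportion (the function name and the example object are illustrative):

```python
def retinal_image_height_mm(object_height_m, distance_m, lens_to_retina_mm=17.0):
    """Height of the retinal image from similar triangles: h / 17 = H / d."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    # H and d share the same unit (metres), so their ratio is unitless
    # and the result comes out in millimetres.
    return lens_to_retina_mm * object_height_m / distance_m


# A 15 m tall object viewed from 100 m away.
print(f"{retinal_image_height_mm(15, 100):.2f} mm")  # 2.55 mm
```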

Image Sensing and Acquisition

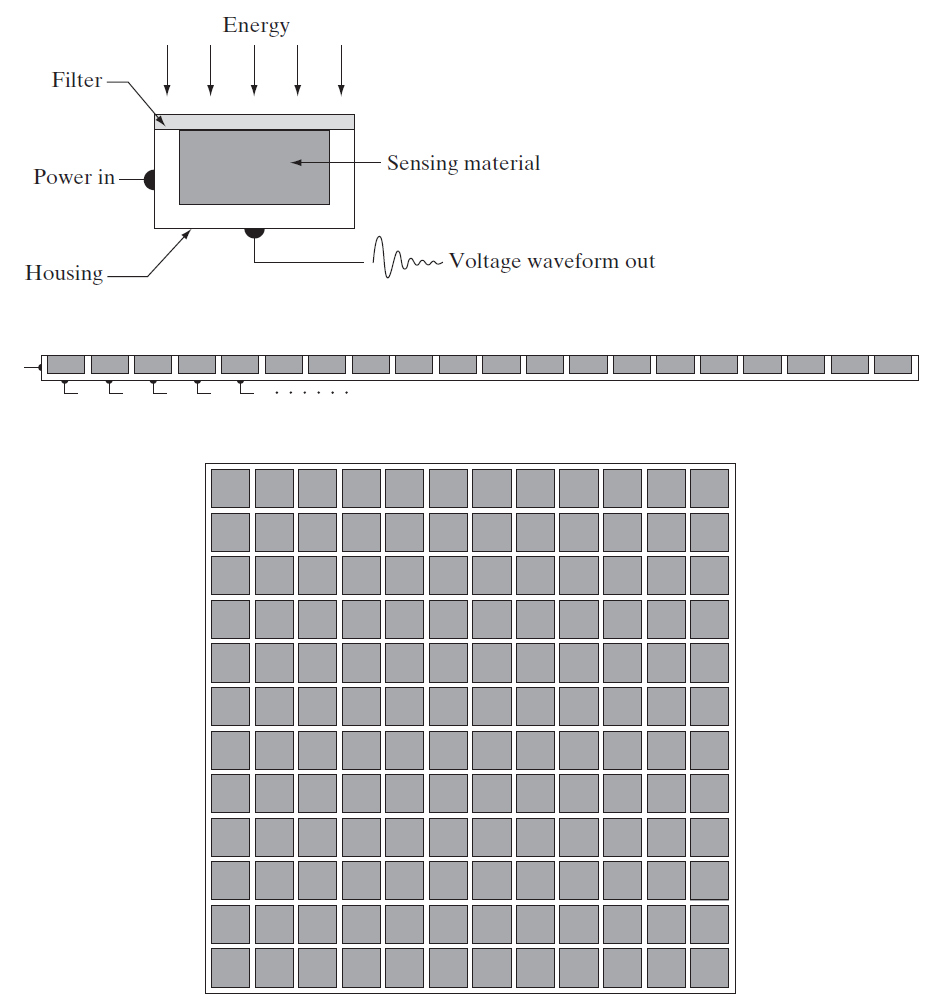

Incoming energy is transformed into a voltage by the combination of input electrical power and sensor material that is responsive to the particular type of energy being detected. For example, a camera sensor detects the intensity of light at wavelengths in the visible spectrum.

An array of these sensors measures energy across the length and width of the scene.

- The sensing array then moves a certain distance, giving it a new set of inputs. Sweeping across the entire area in this way yields an image matrix.
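The sweep can be sketched in Python as follows. The scene is modelled as a hypothetical continuous function `scene(x, y)`, and the sensor as a strip of `n` elements stepped `m` times; both are illustrative assumptions, not a model of any particular device. The readings are still continuous-valued, which is why sampling and quantization must follow.

```python
import math


def scene(x, y):
    """Hypothetical continuous scene: smooth brightness variation in [0, 1]."""
    return 0.5 + 0.5 * math.sin(x) * math.cos(y)


def sweep_line_sensor(scene_fn, m, n, width, height):
    """Step an n-element line sensor across the scene m times.

    Each step records one row of readings; stacking the rows
    yields an m x n image matrix.
    """
    image = []
    for i in range(m):
        y = i * height / m  # position of the sensor strip for this step
        row = [scene_fn(j * width / n, y) for j in range(n)]
        image.append(row)
    return image


img = sweep_line_sensor(scene, m=4, n=6, width=math.pi, height=math.pi)
print(len(img), len(img[0]))  # 4 6
```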

NOTE

This process produces an analog voltage signal, which must then be sampled and quantized to produce a digital image.

Image Sampling and Quantization

Sampling

A continuous-time analog signal is sampled by measuring its amplitude at discrete instants spaced uniformly in time (for an image, at points spaced uniformly in space).

As long as the signal is band-limited and is sampled at a sufficiently high frequency (above the Nyquist rate, which is twice the signal's highest frequency), taking discrete samples loses no information.
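The Nyquist condition can be checked numerically. In this plain-Python sketch (frequencies chosen purely for illustration), a 3 Hz cosine sampled at 4 Hz, below its 6 Hz Nyquist rate, gives exactly the same samples as a 1 Hz cosine, so the two can no longer be told apart (aliasing). Sampling at 8 Hz, above the Nyquist rate, keeps them distinct.

```python
import math


def sample_cosine(freq_hz, rate_hz, count):
    """Sample cos(2*pi*f*t) at t = k / rate for k = 0 .. count-1."""
    return [math.cos(2 * math.pi * freq_hz * k / rate_hz) for k in range(count)]


def same_samples(a, b, tol=1e-9):
    """True if two sample sequences agree within a small tolerance."""
    return all(abs(x - y) < tol for x, y in zip(a, b))


# Below the Nyquist rate (4 Hz < 2 * 3 Hz): 3 Hz aliases onto 1 Hz.
print(same_samples(sample_cosine(3, 4, 16), sample_cosine(1, 4, 16)))  # True
# Above the Nyquist rate (8 Hz > 6 Hz): the two signals stay distinguishable.
print(same_samples(sample_cosine(3, 8, 16), sample_cosine(1, 8, 16)))  # False
```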

Quantization

The sample values have to be quantized to a finite number of levels, and each value can then be represented by a string of bits.

For example, each color channel in JPEG images is usually quantized to 256 (8-bit) intensity levels.
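A uniform quantizer is a minimal sketch of this step: a sample normalized to [0, 1] is mapped to one of 2ⁿ integer levels, and each level fits in an n-bit string. The [0, 1] range and round-to-nearest rule are assumptions for illustration; real encoders may differ.

```python
def quantize(value, bits=8):
    """Uniformly map a sample in [0, 1] to an integer level in [0, 2**bits - 1]."""
    levels = 2 ** bits
    clipped = min(max(value, 0.0), 1.0)   # keep the sample in range
    return round(clipped * (levels - 1))  # nearest of the 2**bits levels


samples = [0.0, 0.2, 0.6, 1.0]
codes = [quantize(s) for s in samples]
print(codes)                              # [0, 51, 153, 255]
print([format(c, "08b") for c in codes])  # ['00000000', '00110011', '10011001', '11111111']
```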

Encoding

- The quantized signal is then encoded into a standardized image format such as PNG, JPEG, RAW, or TIFF.
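To make the encoding step concrete, here is a minimal Python sketch that writes an 8-bit grayscale matrix as PNG bytes using only the standard library (`zlib`, `struct`). It emits just the mandatory IHDR, IDAT, and IEND chunks with no row filtering (filter type 0), so the output is valid but not optimally compressed. In practice an imaging library would normally handle this.

```python
import struct
import zlib


def _chunk(kind, data):
    """One PNG chunk: length, type, data, then CRC-32 over type + data."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)


def encode_gray_png(pixels):
    """Encode a list of rows of 0-255 integers as an 8-bit grayscale PNG."""
    height, width = len(pixels), len(pixels[0])
    # IHDR: width, height, bit depth 8, color type 0 (grayscale),
    # compression 0, filter method 0, no interlacing.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    # Each scanline is prefixed with a filter-type byte (0 = None).
    raw = b"".join(b"\x00" + bytes(row) for row in pixels)
    return (
        b"\x89PNG\r\n\x1a\n"
        + _chunk(b"IHDR", ihdr)
        + _chunk(b"IDAT", zlib.compress(raw))
        + _chunk(b"IEND", b"")
    )


png = encode_gray_png([[0, 64, 128, 255]] * 4)  # 4 x 4 horizontal gradient
print(len(png), png[:8])
```

Writing `png` to a file opened in binary mode (`"wb"`) with a `.png` extension gives an image that standard viewers can open.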

Relation Between Pixels

Neighbors

- N4(p) ⟶ the 4 horizontal and vertical neighbors of pixel p.

- ND(p) ⟶ the 4 diagonal neighbors of p.

- N8(p) ⟶ N4(p) ∪ ND(p), i.e. all 8 neighbors of p.
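The three neighborhoods can be written down directly. A Python sketch using (row, column) coordinates; discarding out-of-range positions at the image border is one common convention and is assumed here.

```python
def n4(r, c):
    """4-neighbors of pixel (r, c): up, down, left, right."""
    return [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]


def nd(r, c):
    """Diagonal neighbors of pixel (r, c)."""
    return [(r - 1, c - 1), (r - 1, c + 1), (r + 1, c - 1), (r + 1, c + 1)]


def n8(r, c):
    """8-neighbors: the union of N4 and ND."""
    return n4(r, c) + nd(r, c)


def in_bounds(points, rows, cols):
    """Drop neighbors that fall outside a rows x cols image (border pixels)."""
    return [(r, c) for r, c in points if 0 <= r < rows and 0 <= c < cols]


print(in_bounds(n8(0, 0), 4, 4))  # [(1, 0), (0, 1), (1, 1)]
```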

Path

- 4-path ⟶ minimum-distance path using N4 movement.

- 8-path ⟶ minimum-distance path using N8 movement.

- m-path ⟶ N4 movement on priority; ND movement only if N4 movement is not possible.

- If path exists, path equals path by definition.
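A breadth-first search finds the shortest path of each kind between two pixels. In this Python sketch, `allowed` is the set V of pixel values permitted on the path (here {1}). m-adjacency follows the standard rule: a diagonal step is taken only when the two pixels share no 4-neighbor whose value is in V. Function and parameter names are illustrative.

```python
from collections import deque


def n4(r, c):
    return [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]


def nd(r, c):
    return [(r - 1, c - 1), (r - 1, c + 1), (r + 1, c - 1), (r + 1, c + 1)]


def shortest_path_length(img, start, goal, kind="4", allowed=frozenset({1})):
    """Length of the shortest 4-, 8- or m-path from start to goal, or None."""
    rows, cols = len(img), len(img[0])

    def in_v(p):
        r, c = p
        return 0 <= r < rows and 0 <= c < cols and img[r][c] in allowed

    def steps(p):
        out = [q for q in n4(*p) if in_v(q)]  # N4 moves are always allowed
        for q in nd(*p):
            if kind == "4" or not in_v(q):
                continue
            shared = set(n4(*p)) & set(n4(*q))  # the two common 4-neighbors
            # 8-path: any diagonal; m-path: only if no shared 4-neighbor is in V
            if kind == "8" or not any(in_v(s) for s in shared):
                out.append(q)
        return out

    if not (in_v(start) and in_v(goal)):
        return None
    dist = {start: 0}
    queue = deque([start])
    while queue:  # breadth-first search: first visit is along a shortest path
        p = queue.popleft()
        if p == goal:
            return dist[p]
        for q in steps(p):
            if q not in dist:
                dist[q] = dist[p] + 1
                queue.append(q)
    return None


img = [[0, 1, 1],
       [0, 1, 0],
       [0, 0, 1]]
for kind in ("4", "8", "m"):
    print(kind, shortest_path_length(img, (0, 2), (2, 2), kind))
# prints: 4 None, 8 2, m 3
```

In this example no 4-path reaches the bottom-right pixel. The 8-path takes the diagonal shortcut through the center, and the m-path refuses that shortcut because the shared 4-neighbor (0, 1) is in V.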

Distance

Euclidean distance

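- Straight-line distance between two points p = (x, y) and q = (s, t): De(p, q) = √((x - s)² + (y - t)²).

Cityblock distance

- Length of the shortest path using N4 movement, ignoring pixel values: D4(p, q) = |x - s| + |y - t|.

Chessboard distance

- The larger of the two axis offsets, i.e. the length of the shortest path using N8 movement: D8(p, q) = max(|x - s|, |y - t|).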

Distance CODE

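```matlab
% Artificial 4 x 4 image with a single nonzero (foreground) pixel
img = zeros(4, 4);
img(3, 3) = 1;
disp(img);

% bwdist: distance from every pixel to the nearest nonzero pixel
% (Image Processing Toolbox), for each of the three metrics
distEu = bwdist(img, 'euclidean')
distCb = bwdist(img, 'cityblock')
distCh = bwdist(img, 'chessboard')
```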
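For comparison, this brute-force Python sketch computes the same kind of distance map as `bwdist` for the same 4 × 4 image, directly from the three distance formulas. It is O((MN)²) and only meant to show the definitions, not to replace `bwdist`'s efficient algorithms. Coordinates are 0-based here, so MATLAB's `img(3, 3)` becomes `img[2][2]`.

```python
import math


def euclidean(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])


def cityblock(p, q):
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def chessboard(p, q):
    return max(abs(p[0] - q[0]), abs(p[1] - q[1]))


def distance_transform(img, metric):
    """Distance from every pixel to the nearest nonzero pixel (brute force).

    Assumes the image contains at least one nonzero pixel.
    """
    rows, cols = len(img), len(img[0])
    seeds = [(r, c) for r in range(rows) for c in range(cols) if img[r][c]]
    return [[min(metric((r, c), s) for s in seeds) for c in range(cols)]
            for r in range(rows)]


img = [[0] * 4 for _ in range(4)]
img[2][2] = 1  # same single foreground pixel as img(3, 3) in MATLAB

for name, metric in (("euclidean", euclidean), ("cityblock", cityblock),
                     ("chessboard", chessboard)):
    print(name)
    for row in distance_transform(img, metric):
        print([round(d, 4) for d in row])
```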

Linear and Non-Linear Operations

H(af + bg) = aH(f) + bH(g)

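H ⟶ operator; a and b ⟶ scalars; f and g ⟶ images.

Linear operation ⟶ satisfies this condition (additivity and homogeneity). If it is also shift-invariant, it can be expressed as a convolution or, equivalently, as frequency shaping (filtering in the frequency domain).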

Non-linear operation ⟶ does not satisfy this condition
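A quick numeric test illustrates the condition. In this Python sketch, the sum of all pixel values behaves as a linear operator, while the maximum pixel value does not. The two 2 × 2 images and the scalars a = 1, b = -1 are arbitrary choices for illustration. A single passing test does not prove linearity, but a single failure disproves it.

```python
def is_linear_on(op, f, g, a, b):
    """Check H(a*f + b*g) == a*H(f) + b*H(g) for one choice of images and scalars."""
    combo = [[a * x + b * y for x, y in zip(rf, rg)] for rf, rg in zip(f, g)]
    return op(combo) == a * op(f) + b * op(g)


def sum_op(img):
    """Sum of all pixel values."""
    return sum(sum(row) for row in img)


def max_op(img):
    """Maximum pixel value."""
    return max(max(row) for row in img)


f = [[0, 2], [2, 3]]
g = [[6, 5], [4, 7]]
print(is_linear_on(sum_op, f, g, 1, -1))  # True
print(is_linear_on(max_op, f, g, 1, -1))  # False: max(f - g) = -2, but 3 - 7 = -4
```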

Numericals on Scanning

Important info

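- An n-bit depth image has 2ⁿ colors/levels.

- A packet with the layout [start | data | end] increases the n-bit depth by start + end bits, usually 2 (one start bit and one stop bit).

- Baud rate is the number of bits transmitted per second, i.e. the speed at which the scanned data is sent.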

- For good quality scans, a minimum of sampling resolution is required.

- =